Testing Instructional Design Best Practices with Learners using Qualtrics

PROBLEM

The team was collaborating on a language learning app. The Content Design team had come up with several new activity types; one of them, an activity that let learners select their own topics, was considered a priority. The development team, based in another country, disagreed and proposed an alternative that presented pre-selected topics to users. I was asked to help resolve the disagreement.

Note: to protect confidential information about unpublished content in the app, I am omitting some pertinent details.

APPROACH

I first reached out to both teams to understand why they held different assumptions about what was best for learners. The Content Design team, whose learning scientists understand the pedagogical impact of learning activities, stated that learner choice is closely tied to motivation and engagement, and that the self-select-your-topic activity represented it well. The development team, which had more exposure to the user market, explained that since most users were preparing for standardized tests, they would prefer a more direct and authoritative learning method. In their view, the self-select activity was especially risky because its development cost was also high.

Based on the information collected from both teams, I learned that the conflict lay in different assumptions about users' attitudes toward the two activity patterns. I decided that concept testing would be an effective way to learn about learners' attitudes while also minimizing risk for the project. Two main questions came to mind:

After experiencing one or both of the activity types, would learners prefer one type of activity over the other?

How might it impact their decision to purchase the app?

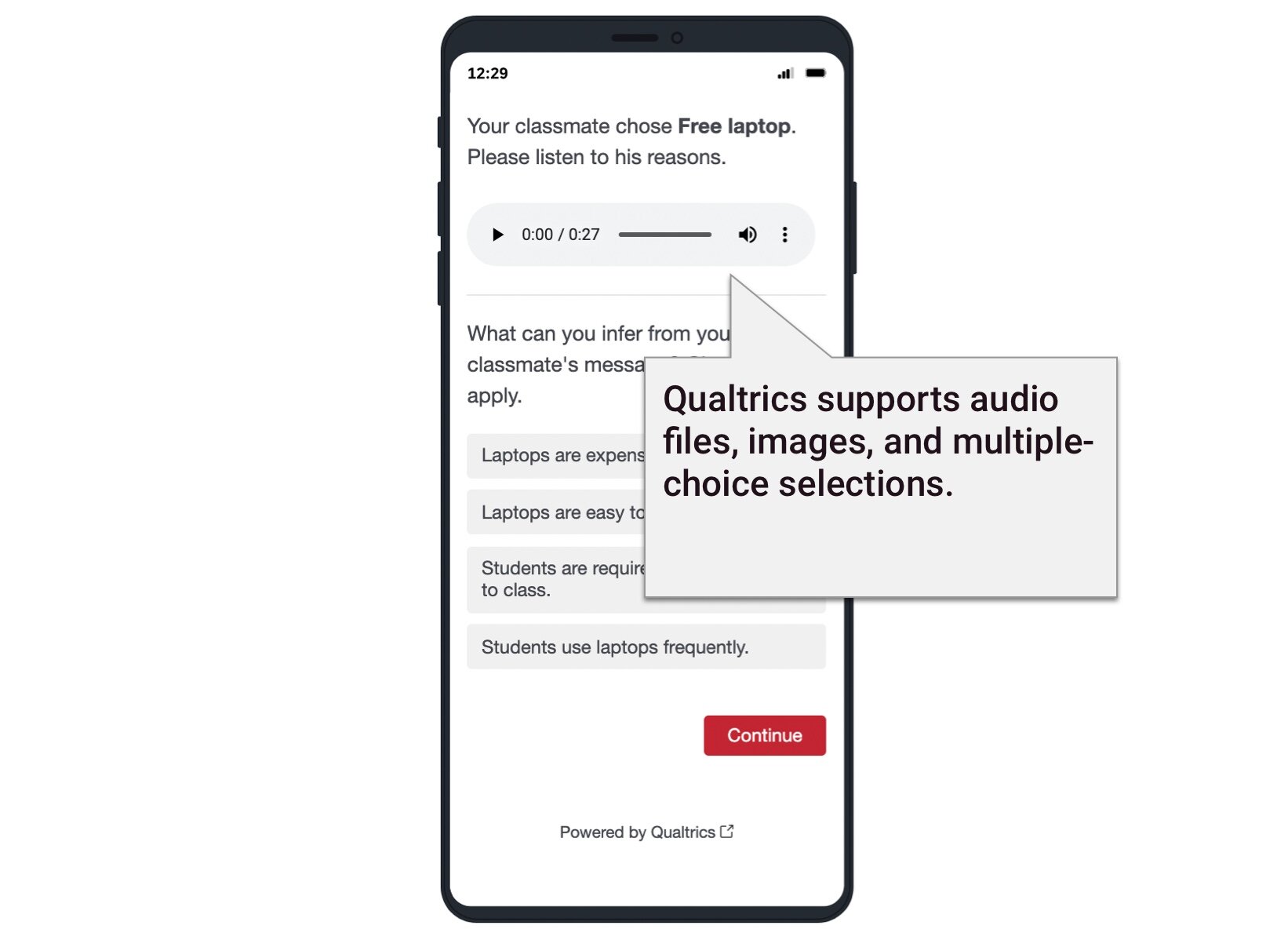

To design the study, I first considered the form in which participants would experience the two activity patterns so that they could react to them. Authentic content, meaning real language learning activities, seemed the most suitable. I worked with content designers on the team to design an activity flow for each activity type: one that ended with a self-select topic activity and one that ended with a pre-selected one. For the platform, I chose Qualtrics because it supports text and audio presentation and is a specialized survey design product. Below is part of the randomizer flow, which places each participant into one of the groups/conditions.

Second, I planned the study setup: should participants go through both activities and share their reactions? If so, the activities would need different content; otherwise, the second activity might fatigue participants, and the purpose of the test would be easily revealed. The result was a 2x2 design with two types of activity content (topics) and two types of activity pattern (pre-selected and self-selected).
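The randomization itself was handled by the Qualtrics randomizer, so no code was written for the study. Purely as an illustration of the logic behind a 2x2 setup, here is a minimal Python sketch. It assumes each participant completes both topics and both patterns once, which yields four possible orderings; the source does not state the exact number of groups, and all names below (`build_conditions`, `assign_evenly`, the topic labels) are hypothetical.

```python
import itertools
import random

# Hypothetical labels; the real topics are confidential.
TOPICS = ("Topic 1", "Topic 2")
PATTERNS = ("pre-selected", "self-select")


def build_conditions():
    """Return every ordering in which a participant sees each topic
    and each pattern exactly once (an assumed within-subject setup)."""
    conditions = []
    for topic_order in itertools.permutations(TOPICS):
        for pattern_order in itertools.permutations(PATTERNS):
            # Pair the first topic with the first pattern, and so on.
            conditions.append(tuple(zip(topic_order, pattern_order)))
    return conditions


def assign_evenly(participant_ids, conditions, seed=0):
    """Shuffle participants, then deal them out across conditions
    so that group sizes differ by at most one."""
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: conditions[i % len(conditions)] for i, pid in enumerate(ids)}


conditions = build_conditions()
assignment = assign_evenly(range(8), conditions)
for pid in sorted(assignment):
    print(pid, assignment[pid])
```

Dealing shuffled participants across conditions, rather than drawing a condition at random for each person, keeps the groups balanced, which is similar in spirit to asking a survey randomizer to present conditions evenly.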

Lastly, I collaborated with a market analyst on the final Purchase Preference Questions part of the survey, which also served as an indicator of the potential impact of the activity patterns.

A few decisions about survey design were made as a team. First, we discussed whether to point out the "self-select" versus "pre-selected" difference between the two activity patterns when asking participants to evaluate them. We decided to do so because it would help participants focus on that specific aspect of the design. To make sure participants had the opportunity to say "no, I don't see a difference" or "I don't care about this difference," we added statements expressing neutrality. We also wanted a quick overall sentiment about the Activity participants had just finished, so we added a general question about their satisfaction with the Activity.

Second, we discussed whether to insert attitudinal questions right after each Activity or to ask them after both Activities were completed. We chose the latter because we did not want to lead participants to pay extra attention to this specific attribute while they were doing the second Activity.

RESULTS

There were multiple sets of data we could analyze:

One quick satisfaction rating question right after each activity

Questions comparing Activity 1 & 2

(Final) Purchase Preference Questions

The main tool we used to analyze these was bar graphs. Most of the effort went into thinking clearly about which variable we were analyzing at each point: the Activity content (Topic 1 vs. Topic 2) or the activity pattern (Pre-selected vs. Self-select).
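To make the distinction between the two variables concrete, the sketch below groups the same set of ratings once by topic and once by pattern. It is only an illustration in Python: the rows are placeholder values, not data from the study, and the 1-5 rating scale and the `mean_by` helper are assumptions.

```python
from collections import defaultdict
from statistics import mean

# Placeholder rows, NOT study data: (topic, pattern, satisfaction rating).
# The 1-5 scale is an assumption made for this example.
responses = [
    ("Topic 1", "pre-selected", 3),
    ("Topic 2", "self-select", 4),
    ("Topic 1", "self-select", 5),
    ("Topic 2", "pre-selected", 2),
]


def mean_by(rows, key_index):
    """Average the rating (column 2) within each value of the chosen column."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key_index]].append(row[2])
    return {key: mean(values) for key, values in groups.items()}


by_topic = mean_by(responses, 0)    # compares Topic 1 vs. Topic 2
by_pattern = mean_by(responses, 1)  # compares pre-selected vs. self-select
print(by_topic)
print(by_pattern)
```

Keeping the grouping column explicit makes it harder to mistake a difference between topics for a difference between patterns, since each bar graph is then built from one named variable at a time.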

Overall, we found a dominant preference for the ability to self-select topics.

IMPACT

Revisiting the main questions we set out to answer, the study showed that learners do have a preference between the self-select and pre-selected activity patterns. Soon after the study results were shared, the development team started building this item type so that the Content Design team could scale up this type of question for learners.